Congratulations! You aced that interview a few weeks ago, and this morning you strolled into the office with a spring in your step! You had the HR induction and were introduced to your new colleagues. Now you’re logging onto the network, the company handbook reassuringly lying on the corner of your desk, or saved on your desktop.

Time to get started! The Company hired you to bring under control something referred to, almost mysteriously, as “Translations”. Your objectives are simple: reduce cost and improve quality. You are their first ever Localisation Manager, and you know the keys to your success will be the standardisation and centralisation of all Localisation activities.

So what do you need to consider from a technical and organisational point of view?

Getting to Know your Internal Customers

If your Organisation has been doing Translations, there will be existing processes and linguistic assets you can build on. You need to learn about them quickly by focussing on:

- Who are your allies? Each Department, Local Office, etc. probably has at least one “Translation person”. Find out who they are and what they have been doing. Determine whether they will remain involved once you’ve established the new structure, or whether they expect to be relieved of Localisation duties. All going well, you may be able to enrol some of them in an inter-departmental Localisation team, even if it’s only a virtual team.

- What is the inventory of current processes? Meet the current owners and document everything. There is no need for anything fancy, since you are going to change these processes, but get it all down so that once the inventory is finished you have an accurate and complete picture.

- What do all these processes have in common? Which of them work well and which don’t? The successful ones will be the building blocks for your future world.

- What is specific to each one? Which of those specific features are worth keeping? Can they be used by other parts of the Organisation? Do they need to remain specific? Your new processes will need to strike a balance between harmonisation and flexibility.

- Do any of those existing processes use technology such as CAT Tools, Content Management Systems or Translation Management Systems? If so, should they be scaled up and shared across the Organisation?

- Do any maintain linguistic assets like Glossaries, Style guides, Translation Memories or even just bilingual files which could be used to create TMs?

Understanding your Product Lines

You need a thorough understanding of what you are going to localise before you can develop the processes. The questions to answer are:

- What types of content? Marketing, commercial website, Software, Help systems, self-service technical content, user-generated content like blogs, etc. all use very different registers, vocabulary and forms of address. Moreover, the choices made will differ again from one language to the next. Some content types require high volumes at low cost, such as Support content or product specifications. Others require high quality and creativity, like Copywriting and Transcreation, and you may even choose not to use TMs for some of those. Some will be specific to parts of your Organisation, while others will be global material. You will need to ensure a consistent Corporate identity across all of these, in all languages.

- What are the subject fields? Automotive, medical and IT content each require linguists with different backgrounds and specialisations. Make sure you know all the areas of expertise to cover during Translation and Review. For some, you might need to add Subject Matter Expert (SME) review to the more common step of Linguistic Review. Review changes will need to be implemented, communicated to Translators and fed into the TMs, but the process must let SMEs take part without having to learn CAT Tools.

- From a technical point of view you will also need to work with the content creators to determine the type of files you will receive from them and those they expect to receive back.

- Start a war on spreadsheets as soon as possible. You probably won’t win it, but the more of them you root out, the better. Teach your customers how parsing rules protect their code by exposing only Localisable content during translation. Promote Localisation awareness during Development and Content creation. Document best practices such as avoiding hard-coded strings and leaving enough space in the UI for translations that can be up to 30% longer than the source text, at least when the source is English.

- Your aim should be to receive files that can go straight to Translation with minimum pre-processing, and to deliver files that your customers can drop into their build or repository for immediate use.

- No one should be doing any copy-paste engineering, manual renaming or file conversion.
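One common way to check hard-coded strings and UI space before any real translation starts is pseudo-localisation: every UI string is automatically “translated” into an accented, padded version of itself, and the build is then reviewed. The Python sketch below illustrates the idea under stated assumptions and is not a prescribed tool: it assumes strings live in a simple key-to-text catalogue, that placeholders look like `{name}` (and must be left untouched, just as parsing rules protect code), and it pads by roughly 30% to mirror the rule of thumb above.

```python
import re

# Assumption: placeholders in UI strings look like {name}; adapt the pattern to your file format.
PLACEHOLDER = re.compile(r"(\{[^{}]*\})")
# Swap plain vowels for accented ones so untranslated text stands out on screen.
ACCENTS = str.maketrans("aeiouAEIOU", "àéîõüÀÉÎÕÜ")


def pseudo_localise(text: str, expansion: float = 0.30) -> str:
    """Return an accented, padded, bracketed version of a source string."""
    # With a capturing group, re.split puts text at even indices and placeholders at odd ones.
    pieces = PLACEHOLDER.split(text)
    body = "".join(piece if i % 2 else piece.translate(ACCENTS) for i, piece in enumerate(pieces))
    # Pad by roughly 30% of the source length to simulate a longer translation.
    padding = "~" * max(1, round(len(text) * expansion))
    # Brackets make truncation visible: a missing "]" means the UI clipped the string.
    return f"[{body}{padding}]"


def pseudo_localise_catalogue(catalogue: dict[str, str]) -> dict[str, str]:
    """Apply pseudo_localise to every entry of a hypothetical key -> text catalogue."""
    return {key: pseudo_localise(text) for key, text in catalogue.items()}


if __name__ == "__main__":
    demo = {"greeting": "Hello {name}", "save_button": "Save"}
    for key, value in pseudo_localise_catalogue(demo).items():
        print(key, "->", value)
```

Any text that appears on screen without the brackets never went through the localisation pipeline, which usually means a hard-coded string; a clipped closing bracket points to UI that has no room for expansion.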

Designing your Workflows

This can start with a pen and paper, a white board or whatever helps you think quicker, but it should end with a flowchart or set of flowcharts describing the process you’re setting up.

- Collaborate with your internal customers. You need to agree on a sign-off process, and avoid multiple source updates during or after the Translation process.

- Enumerate all the stages required and determine the following:

- How many workflows do you need to describe all scenarios? Try to find the right balance: fewer workflows ensure efficiency, but too few will lead participants to implement their own sub-processes to achieve their goals, and you will lose control and visibility.

- What stages do you need? The most common are:

- Pre-processing

- Translation

- Linguistic Review

- Post-Processing

- Visual QA

- Who are the owners of each step? Are they internal or external (i.e. colleagues or service providers)? How will you monitor progress and status? How will you pay?

- Is there a feedback loop and approval attached to certain steps? Will they prevent the workflow from advancing if certain criteria are not met? Is there a limit to the number of iterations for certain loops?

- What automation can be put in place to remove human error, bottlenecks and “middle men” handling transactions?
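Once the stages, owners and loops are agreed, it helps to express them precisely enough that a TMS, or even a script, could enforce them. The sketch below is a hypothetical Python model, not the configuration format of any particular product: it walks a job through the five common stages listed above, sends it back to Translation when Linguistic Review rejects it, and stops looping after an assumed maximum of two rework rounds so that a person can step in.

```python
from dataclasses import dataclass, field

# The five common stages from the list above, plus a terminal state (an assumption).
STAGES = ["Pre-processing", "Translation", "Linguistic Review",
          "Post-Processing", "Visual QA", "Delivered"]
MAX_REVIEW_LOOPS = 2  # hypothetical cap on Review -> Translation rework rounds


@dataclass
class Job:
    stage: str = STAGES[0]
    review_loops: int = 0
    escalated: bool = False
    history: list[str] = field(default_factory=list)


def advance(job: Job, approved: bool = True) -> Job:
    """Move a job one step; a rejected review goes back to Translation, up to the cap."""
    if job.stage == "Delivered" or job.escalated:
        return job  # nothing left to automate: finished, or waiting for a human decision
    job.history.append(job.stage)
    if job.stage == "Linguistic Review" and not approved:
        job.review_loops += 1
        if job.review_loops > MAX_REVIEW_LOOPS:
            job.escalated = True  # stop the loop and flag the job for the Localisation Manager
        else:
            job.stage = "Translation"  # feedback loop: send back for rework
        return job
    job.stage = STAGES[STAGES.index(job.stage) + 1]
    return job


if __name__ == "__main__":
    job = Job()
    for approved in (True, True, False, True, True, True, True):
        advance(job, approved)
    print(job.stage, job.review_loops, job.history)
```

Capping the loop is the design choice that matters here: without it, a disagreement between Translator and Reviewer can bounce a job back and forth indefinitely without anyone noticing.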

Choosing your Vendors

Once you’ve determined which of your workflow steps need to be outsourced, you will need to select your providers. Linguistic vendors will likely be your most important choice.

Translation

In-house translators are a luxury rarely afforded. When choosing Translation vendors, first decide between Freelancers and Language Service Providers (LSPs). Managing a pool of Translators is a job in itself, so most companies hire an LSP, which can also provide relief with Project Management, technology changes, and staff fluctuations due to workload or holiday periods. Having more than one LSP can be a good strategic choice: it gives you more flexibility with scheduling and pricing. You can specialise your vendors according to content, region or strength. A certain amount of overlap is necessary for you to be able to compare their performance and benefit from a bit of healthy competition.

Linguistic review

Whichever setup you have for Translation, you will need linguistic review to ensure the integrity of the message is preserved in the target languages. You will also need to ensure consistency between Translators or Agencies, check Terminology, and maintain TMs and Style guides.

Marketing and Local Sales Offices often get involved with that. However, using internal staff takes them away from their core tasks, unless you are lucky enough to have dedicated Reviewers. More than likely, in-country colleagues will find it difficult to keep up with the volume and fluctuations of the Review work and will ultimately prove an unreliable resource. The solution is to hire professional Reviewers. Many LSPs provide such services. Some companies ask their competing providers to review each other, but that often results in counterproductive arguments. A dedicated third-party review vendor will be best placed to enforce consistency, accurately measure quality, maintain linguistic assets, and even manage translator queries on your behalf.

Selecting Technology

Translation Memory technology is a must. Which one you go for may be determined or influenced by existing internal processes, particularly if there are linguistic assets (TMs and Glossaries) in proprietary formats. Your vendors may also have a preferred technology or even propose to use their own. If you go down that road, make sure you own the linguistic assets. The file format is another choice that needs to be made carefully from the start. Open standard formats may save you from being locked into one technology. However, technology vendors often develop better functionality for their proprietary formats. It can be a trade-off between productivity and compatibility.

The good news is that conversion between formats is almost always possible. This means migration between technologies is possible, but avoid including conversion as a routine part of the process. Even if it’s automated, having to routinely output TMs in several formats, for example, will introduce inefficiencies and increase user support requirements.
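To make asset ownership and portability concrete, here is a minimal sketch that writes translation pairs to TMX, the XML-based open standard for exchanging Translation Memories. It uses only Python’s standard library; the tool name, version and language codes are placeholders, and a real export from your CAT tool or TMS would carry much more metadata (dates, users, context).

```python
import xml.etree.ElementTree as ET

# ElementTree serialises attributes in this namespace with the reserved "xml:" prefix.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"


def to_tmx(pairs, srclang="en-US", tgtlang="fr-FR"):
    """Build a minimal TMX 1.4 document from (source, target) segment pairs."""
    tmx = ET.Element("tmx", version="1.4")
    ET.SubElement(tmx, "header", {
        "creationtool": "tm-export-sketch",  # hypothetical tool name
        "creationtoolversion": "0.1",        # hypothetical version
        "segtype": "sentence",
        "o-tmf": "none",
        "adminlang": "en-US",
        "srclang": srclang,
        "datatype": "plaintext",
    })
    body = ET.SubElement(tmx, "body")
    for source, target in pairs:
        tu = ET.SubElement(body, "tu")  # one translation unit per segment pair
        for lang, text in ((srclang, source), (tgtlang, target)):
            tuv = ET.SubElement(tu, "tuv", {XML_LANG: lang})
            ET.SubElement(tuv, "seg").text = text
    return ET.tostring(tmx, encoding="unicode")


if __name__ == "__main__":
    print(to_tmx([("Save", "Enregistrer"), ("Cancel", "Annuler")]))
```

Because TMX is vendor-neutral, keeping a periodic export like this is one way to make sure the TMs stay yours if you change tools, without making conversion a routine step in the daily process.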

Translation Management Systems have become so common that some think they are on the way out. At the very least, you will need a Portal to support file transactions and to share your linguistic assets with all the participants in your supply chain. Emails, preferably automatic notifications, should be used to support the transactions, but they should be avoided when it comes to file swapping. FTP is a common option: it is easy to set up and learn, and cheap to run, but it can soon turn into a mess and gives you zero Project Management visibility. To achieve efficient status monitoring, resource pooling and any type of automation, you should consider a Translation Management System.

Whether you go for the big guns like WorldServer or SDL TMS, or for something more agile like XTRF TMS, you will reduce the number of bottlenecks in your process: handoffs will go straight from one participant to the next. The Project Managers will still have visibility, but no one will have to wait for them to pass on the handoff before getting started. TMs will be updated in real time and new content will become reusable immediately.

A few things to look out for in your selection:

- Fewer clicks = shorter kickoff time. Setting up Projects in a TMS is an investment. If you look at an isolated Project, it is always going to take longer than dumping files on an FTP and emailing people to go and get them. As soon as you start looking at a stream of Projects, a TMS makes complete sense. Still, a TMS’s worst enemy is how many clicks it needs to get going.

- Scalability: you need the ability to start small and deploy further, without worrying about licenses or bandwidth.

- Workflow designer: demand a visual interface that is easy to customise and can be edited without having to hire the services of the technology provider. Don’t settle for anything that will leave you at the mercy of the landlord.

- Hosting: weigh your options carefully here again. In-house is good if you have the infrastructure and IT staff. But letting the Technology provider host the product may be a more reliable option. This is their business, after all; maybe you don’t need to reinvent the wheel on that one.

- User support: the cost and responsiveness of the Support service are essential. No matter how skilful you and your team are, once you deploy a TMS to dozens of individual linguists there will be a non-negligible demand for training and support. Make sure this is provided for before it happens.

Once you’ve made all these decisions, you will be in good shape to start building an efficient Localisation process. Last but not least, don’t forget to decide whether to spell Localisation with an “s” or a “z”, and then stick to it! 🙂

Related articles:

Crowdsourcing in Localisation: Next Step or Major Faux Pas?

Globalization – The importance of thinking globally

SDL Trados 2007: Quick Guide for the Complete Beginner

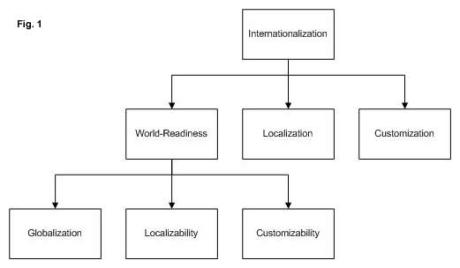

Which comes first, Globalization or Internationalization?

Who’s responsible for Localization in your organization?

One by one, SDL continue to address obstacles to our upgrade decisions. Earlier today, one of their webinars tackled the critical topic of Compatibility in Translation Supply Chain. A recording will be available at

One by one, SDL continue to address obstacles to our upgrade decisions. Earlier today, one of their webinars tackled the critical topic of Compatibility in Translation Supply Chain. A recording will be available at