Transcreation is used for high-visibility content on Dell.com. In this example, the French banner has a more seductive tone and replaces "Shop Now" with "Discover More".

Last summer, Wayne Bourland, Director of Global Localization at Dell, spoke about Machine Translation at the LocWorld Conference in Barcelona. He raised some very interesting points, which were later echoed in an article in MultiLingual (July-August 2011). The central idea was that MT was failing to gain traction for three reasons: clients not knowing how to buy it, providers not knowing how to sell it, and translators being reluctant to support it.

Wayne is an active member of the Global Localisation community. He has been involved in developing best practices in the industry, sharing experiences with other Localisation consumers and developing sophisticated partnerships with providers.

He has now agreed to revisit these ideas with us and discuss the outlook for MT. We’ll also take this opportunity to talk about other aspects of Localisation, such as Translation Management Systems and Translation Technology in general.

[Nick] Hi Wayne, thanks very much for agreeing to give some of your time to talk to Localization, Localisation. Could you start by giving us an overview of your career?

[Wayne] I came into this industry in an unconventional way. After a decade in the US Army I joined Dell, starting as a phone tech support agent. After moving into management, I helped to establish call centers in India and South America before making the jump to managing writers for support content. We had a small translation operation supporting tech support content in 7 languages. After I was asked to take over the translation team, we grew it rapidly, moving into online content, then marketing, to where we are today: supporting more than 90 different groups across Dell.

Machine Translation

Now let’s start with MT. Does MT still get more talk than action or have you observed an evolution in the last year? Has your team been driving changes in this area?

I think we are certainly seeing a groundswell. Jaap van der Meer of TAUS used to talk about 1,000 MT engines; now he talks about tens or hundreds of thousands of them, trained very quickly and supporting a multitude of languages and domains. Every major client-side translation leader I talk to is using MT in some way. Some are still toying with it, but many are investing heavily. Vendors have caught on to the growing demand and are either building their own capabilities or forging partnerships with MT providers. Groups in academia are starting to see the value in commercializing their efforts. Soon we may have the problem of too much choice, but on the whole that’s a positive change for buyers. As for the role my team is playing, we are doing what we have done for years: representing the enterprise client voice and discussing our perspective wherever we can (like here).

"If you go to Dell.com to purchase a laptop in France, or Germany for instance, the content you see is Post-Edited Machine Translation"

I know Dell has been using MT for a long time for Support content. Are you now able to use it for higher-visibility content? Is MT effective for translating technical marketing material such as product specs and product webpages? Are more Localisation consumers ready to trust it?

Since May of last year we have been using MT with post-editing in the purchase path of Dell.com. That means if you go to Dell.com to purchase a laptop in France or Germany, for instance, the content you see is PEMT (post-edited machine translation). As of February of this year we are supporting 19 languages with PEMT. So yes, MT can be used for something other than support content. That’s not to say we have cracked the code: it still requires extensive post-editing, and we haven’t yet seen the productivity gains we had hoped for, but we are making progress. Being on the cutting edge means dealing with setbacks.

I don’t think it’s a question of consumer trust. If you do a good job of understanding consumer needs for your domain, and you measure your MT program against quality KPIs that mirror those expectations (rather than relying simply on BLEU scores and the like to tell you how you are doing), then consumer trust won’t be an issue.

Which post-editing strategy produces the optimum results? Presumably it depends on the content type, but do you favour post-editing only, post-editing plus review sampling, or post-editing plus full review? What are the quality assurance challenges specific to using MT?

I favour all of the above; each has its place. Following on from my previous answer, it’s about understanding the desired outcome. MT will be ubiquitous some day and people need to get used to it. You don’t start by picking the right process; you start by picking the right outcome, the appropriate balance of cost, time and quality, and you work backwards to the right process. If you’re supporting a large operation like I am, or just about any large enterprise client translation team, you’re going to need a number of different processes tuned to the desired outcome for each content type. You build a suite of services and then pull in the right ones for each workflow. What we are doing on Dell.com today is PEMT with quality sampling. We decided that full QA (which we are moving away from for all translation streams) didn’t make sense once we factored in our cost and velocity goals. Of course, we have a great set of vendors and translators who make the PE work. Our quality standard has not changed.

Are LSPs learning how to sell it? Is it finding its way into the standard offering of most providers, or does it remain a specialist area, available for example only in very high-volume programs?

Wayne Bourland, Dell

I think some of them are. There are many LSPs out there who are still shying away from it, but the majority of the larger suppliers are getting the hang of it. They see the trends; they know that services, not words, are what will drive margin dollars, and MT is a big part of that service play. I wouldn’t say it’s standard yet, though; in many cases it’s still handled as a completely separate conversation from traditional translation, but that is changing too. The more savvy LSPs are talking to clients about desired outcomes and how they can support them across the enterprise. The key, at least for selling into large enterprises, is that you can’t be a speciality shop. Companies are increasingly moving to preferred supplier lists, narrowing down the number of companies they buy services from. So going out and picking 2 or 3 translation companies, 2 or 3 MT providers and a couple of transcreation specialists is happening less and less. Clients are looking for a handful of vendors who can bring all of these services, either organically or through partnerships.

You also expressed the opinion that the work of Translators would tend to polarise, with high-end Transcreation-type work on one hand and high-volume post-editing on the other. Are you observing further signs in that direction? How does the prospect of localising ever-expanding user-generated content such as blogs and social media fit into this view?

I think this still holds true. We can argue about when it happens, but at some point MT will be part of nearly every translation workflow. Traditional translation work may not decrease, but the growth will be in MT and MT-related services. I think user-generated content is the domain of raw MT, or even real-time raw MT. Investing dollars and resources to translate content that you didn’t invest in creating in the first place really doesn’t make sense. Either the community will translate it, or interested parties can integrate MT to support their regional customers, but I can’t see a business case for any other form of translation for this type of content.

Support sites were one of the earlier content types to adopt Machine Translation

Is the distinction between MT and TM losing relevance? In mature setups, all content is highly leveraged. TM sequencing is often used to maximise reuse even across content types, while taking into account their different levels of relevance. Post-editing and review have to ensure that any leveraged translation is the right translation in context and at the time, regardless of its origin. In other words, once a match is fuzzy, does it matter whether it comes from human or machine translation?

It shouldn’t matter, and I think eventually it won’t, but it still does today, to my frustration. Translators still dislike MT, even in case studies where the MT output has been shown to perform better than TM fuzzy matches. And of course MT still has its challenges. We just aren’t there yet. I see them co-existing for some time to come, but eventually they will be one and the same for all practical purposes.

Translation Memory Technologies

What are the main advances you have observed in TM technology over the last few years? Which are the most significant from the point of view of a Localisation consumer? Translator productivity tools such as predictive text? In-context live preview? The deployment of more TMSs? The variety of file formats supported? Or perhaps the ability to integrate with CMS and Business Intelligence tools?

I won’t claim to be an expert on translation technology, but I really like in-context live preview, and more TMSs are starting to support it. Nothing beats seeing content as it’s going to be seen by the consumer for ensuring the most contextually accurate translation. I think all of the technologies you mention have a place, but I am especially interested in tools that assist the translator. We have this crazy paradox in our industry where we have spent years trying to make human translators more machine-like (increased productivity) and machines more human-like (human-quality MT). I think to a large degree we have neglected to innovate for the translator community. Too much time was spent trying to squeeze rates down and word counts up without really investing in translators and the tools that would make this possible.

Conversely, are there pain points you have been aware of for some time and are surprised are still a problem in 2012?

There are a number of them. TM cleaning is far more difficult than it should be, and good tools to help are sparse. The differences in word counts between different toolsets are also challenging: quotes from two vendors for the same job, at similar rates, can vary widely because of large deltas in word count.
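To make the word-count problem concrete, here is a minimal Python sketch. The two counting rules below are hypothetical simplifications and do not reproduce any particular vendor's or tool's algorithm; real tools disagree on many more points (tags, placeholders, numbers, CJK text). Even so, the same product string yields different counts, and a per-word quote scales directly with that difference.

```python
import re

def count_rule_a(text: str) -> int:
    """Hypothetical rule A: every whitespace-separated token is one word,
    including numbers and stray punctuation such as '/'."""
    return len(text.split())

def count_rule_b(text: str) -> int:
    """Hypothetical rule B: hyphens, slashes and apostrophes also split words,
    and tokens containing no letters (e.g. '13') are not counted."""
    tokens = re.split(r"[\s\-/'’]+", text)
    return sum(1 for token in tokens if re.search(r"[^\W\d_]", token))

if __name__ == "__main__":
    text = "Dell's XPS 13 ultra-thin laptop: 8GB RAM / 256GB SSD, Wi-Fi 6 built-in."
    a, b = count_rule_a(text), count_rule_b(text)
    print(a, b)  # 13 14
    # Relative difference, which carries straight into a per-word quote.
    print(f"delta: {abs(a - b) / min(a, b):.1%}")  # delta: 7.7%
```

A few percent on a single string looks trivial, but across a large annual volume it is exactly the kind of delta that makes two otherwise similar quotes hard to compare.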

The ability to leverage from many Translation Memories and prioritise between them is in my opinion a must-have. Do you see any negative side to TM sequencing? Is the cost of managing linguistic assets a concern to customers?

I think one potential negative of TM sequencing is that it allows people to get lazy with TM management. Simply adding more TMs to the sequence doesn’t ensure success. The cost of managing linguistic assets is a concern, although I think we don’t always realize how big a concern it should be. As mentioned above, TM cleaning is costly and time-consuming, but necessary. Clients and SLVs (single-language vendors) alike should put TM maintenance on the annual calendar and ensure that at least some time is devoted to reviewing the strategy. Poor TM management means a lot of lost opportunity, both in cost and in quality. It’s something I don’t think we do nearly well enough.

How about TM Penalties? Do you see a use for them as a part of Quality Management strategy, or are they a cost factor with little practical use to the customer?

I think they have a purpose: if you know one set of TMs is more appropriate for your domain, you want to ensure it is used first for segment matching. However, penalties should be used cautiously. We penalized a TM when we shouldn’t have, and it cost us a large amount of money before we figured it out. Hence the need to review your TM strategy periodically and also to watch leverage trends!
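For readers less familiar with penalties, the Python sketch below illustrates, under simplifying assumptions, how a penalty interacts with TM sequencing and why a misplaced one can quietly erode leverage. It is hypothetical throughout: `difflib` similarity stands in for a real TMS's fuzzy-match algorithm, the 75% threshold and the penalty values are illustrative, and the TM names (`dell-web`, `legacy`) are made up. Real TMSs implement sequencing and penalties in their own ways.

```python
from dataclasses import dataclass, field
from difflib import SequenceMatcher
from typing import Optional

@dataclass
class TranslationMemory:
    name: str
    penalty: int = 0  # percentage points subtracted from every match (hypothetical)
    entries: dict = field(default_factory=dict)  # source segment -> target segment

def fuzzy_score(a: str, b: str) -> int:
    """Stand-in similarity on a 0-100 scale; real tools use their own algorithms."""
    return round(SequenceMatcher(None, a, b).ratio() * 100)

def best_match(segment: str, sequence: list, threshold: int = 75) -> Optional[tuple]:
    """Return (tm_name, target, score) for the best match at or above the threshold.

    The highest penalized score wins; on a tie, the TM earlier in the sequence
    wins. Returns None when nothing reaches the threshold, i.e. the segment is
    treated (and paid for) as new words.
    """
    best = None
    for position, tm in enumerate(sequence):
        for source, target in tm.entries.items():
            score = fuzzy_score(segment, source) - tm.penalty
            if score < threshold:
                continue
            rank = (score, -position)  # higher score first, then earlier position
            if best is None or rank > best[0]:
                best = (rank, tm.name, target, score)
    return None if best is None else best[1:]

if __name__ == "__main__":
    segment = "Shop now for laptops"
    web = TranslationMemory("dell-web", 0, {segment: "Achetez vos ordinateurs portables"})
    legacy = TranslationMemory("legacy", 5, {segment: "Acheter des ordinateurs portables maintenant"})

    # The unpenalized domain TM wins with an exact match.
    print(best_match(segment, [legacy, web]))  # ('dell-web', 'Achetez vos ordinateurs portables', 100)

    # Penalizing the right TM by mistake: its exact match drops to 70, below the
    # threshold, so only the legacy TM's 95 survives...
    web.penalty = 30
    print(best_match(segment, [web, legacy]))  # ('legacy', 'Acheter des ordinateurs portables maintenant', 95)
    # ...and without a fallback TM the segment is lost to leverage altogether.
    print(best_match(segment, [web]))  # None
```

In this sketch the sequence position only breaks ties, so the penalty, not the order, decides which TM wins. That is one design choice among several, and it shows why a wrongly applied penalty can cost money silently: nothing fails, leverage just drops, which only becomes visible if you watch leverage trends.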

I see source Content Management, or Quality control during the creation of the source content, as a key to quality and cost control in Translation. Can you tell us about what you have observed? How is source quality controlled at Dell? Do you have any insight into the process of choosing and setting up Source Content tools with Localization in mind?

I agree there is huge potential in controlling the upstream content creation process. It’s also, for many of us, very difficult to do. You’re starting to see a lot of clients and LSPs do more here. It’s another one of those services that SLVs can build into their suite to derive revenue from non-word sources. It’s also an area where translation teams can show innovation and have a larger impact on company objectives. We are in the process of implementing Acrolinx with several of our content contributors. I think the key is getting buy-in from the authoring teams and their management. You have to be able to demonstrate how this helps them and the bottom line.

Are Terminology and Search Optimization Keywords managed in an integrated manner, from the source content creation to the Localised content deployment?

You’re kidding, right? I know you’re not, and it’s a really important topic, but no, we don’t do it in an integrated manner today, and I think many of us are struggling to figure this one out. We are piloting an Acrolinx add-on that targets localized SEO, but I think a lot of education is needed for companies to understand the potential here.

Translation Management Systems

Your team uses a Translation Management System to collaborate with vendors and their linguists. What is your overall level of satisfaction with the technology?

I haven’t talked to a single large enterprise client who is “satisfied” with their TMS. That’s not to say that everyone is unhappy, but many of us have had to invest a great deal of time and money into fitting the TMS into our ecosystems. The lack of observed standards exacerbates the problem. I don’t know what the solution is here. More competition would help, but it isn’t a silver bullet. Perhaps more interest from major CMS players would help drive innovation. The CMS industry is much larger than the TMS industry, and integrations are becoming more and more commonplace. We will have to wait and see. I do know that user communities have formed around many of the larger TMS offerings, and I think the shared voice of many customers will help to push for the right changes. If you’re not participating in one of these now, I would encourage you to do so!

When purchasing enterprise solutions it can be difficult to accurately estimate the financial benefits. Providers will often help potential buyers put together an ROI estimate. With the benefit of hindsight, could you share with us how long it took for your Translation Management System to pay for itself in cost savings? How did that compare to the ROI estimated at the time of the original investment?

I wasn’t a party to the purchase and implementation of our current solution. I am aware of the cost, but not the promised ROI. However, I can say that it probably paid for itself in year 2, due more to the volume ramp-up than anything else. I would certainly say that the value we get from our TMS more than pays for the ongoing maintenance. I do know that moving between TMSs, considering the level of integration we have, would be daunting, and the ROI would have to be very large and very attainable.

Online Sales sites re-use large amounts of existing translations thanks to TMs

Which would be your top 5 criteria if you were to purchase a new Workflow System today?

1- It would have to support multiple vendors

2- Have a robust API for integrating with varied CMSs

3- Support all modern document formats (CS 5.x, Office 2010, etc.)

4- Cloud-based and scalable

5- Easy client-side administration

There are probably 100 more factors…

I’ve come across a number of relatively new TMSs recently. They often have some nice new features and friendlier, more modern user interfaces. But I find they tend to lack features that users of the more established systems take for granted: TM sequencing, the ability to work offline or even to download word count reports are not necessarily part of the package. Have you had opportunities to compare different systems? If so, what was your impression?

We are so tightly integrated with a number of CMSs that we have not been in a position to look at other options. I think that is the key challenge for companies selling TMSs: how do you break the lock-in?

The upgrade process for TMS systems is sometimes difficult because of the vast number of users involved or the automation and development effort which may have been done to connect to Content Management Systems, Financial Systems, Portals etc. Is that also your experience? Can you tell us about your process for minor and major upgrades?

We feel this pain often. We have rarely had an upgrade that didn’t spawn additional issues or downtime. We have worked with IT and the tool supplier to set up regression testing, testing environments, upgrade procedures, failure protocols, etc., but it still seems we can’t pull off a seamless launch, primarily due to a failure of some sort on the supplier side. It’s frustrating, and many of my peers are having the same experience.

In the domain of Quality Control, the availability of QA models in TMSs seemed like a major development one or two years ago. Yet I find they are not actively rolled out, and offline spreadsheet-based Quality Reports have proven resilient. Is that also your experience? And do you think the trend towards more flexible and content-specific quality measurement systems like that of TAUS, particularly in the area of gaming, makes online LISA-type QA models more or less adequate?

We championed the inclusion of a QA system in our current TMS and don’t use it. We found that it just wasn’t robust enough to handle all of the different scenarios. We still use spreadsheets; they have worked for years and probably will for many more. We are participating with TAUS on their new proposed quality model and I am eager to see where it goes. I think the use of the content and the audience play a big role, and they are ignored by today’s quality models, which look only at linguistics. Customers don’t care about linguistics; they care about readability and whether or not the content speaks to them.

Do you know the proportions of Translators and Reviewers respectively, working online and offline on your production chain? Is this proportion changing or stable? What do you think would be the deciding factor to finally getting all linguists to work online?

I think it is about 50/50 right now, but that’s really more a difference in how our different vendors work than tools or process. I don’t see it changing in the near term, but I would like to see more move online, I think there is opportunity for quicker leverage realization and other enhancements that make a completely online environment look attractive.

Conclusion

As you probably know, Ireland has had a pretty rough ride in recent years. But the Localisation industry is doing comparatively well. What are the main factors behind Ireland’s prominent place in the Localisation industry? Many companies run their Localisation decision centres and supplier partnerships from Dublin. Do you think this will continue in the future?

Now we are really going outside my area of expertise. I think Ireland’s location (in Europe), its influx of immigrants with language skills, the strong presence of language and MT in Academia, and of course, the resilience and work ethic of the Irish all serve to make Ireland a great place for the language services industry. I don’t see that changing anytime soon. Hopefully not, I do love my bi-annual trips to Dublin! Coincidentally, I am typing these answers on the plane to Dublin. I can taste the Guinness now. 🙂

Wayne will take part in two discussions at this year’s LocWorld Conference in Paris, June 4-6: one about Dell’s Self Certification program for Translators and one about Multilingual Terminology and Search Optimisation. Self Certification is a concept implemented by Dell where, instead of having Translation followed by QA, Translators perform a few additional QA steps to certify their own work. This removes the bottleneck of a full-on QA step. Independent weekly sampling and scoring are used to monitor the system, but they are not part of the actual production chain.

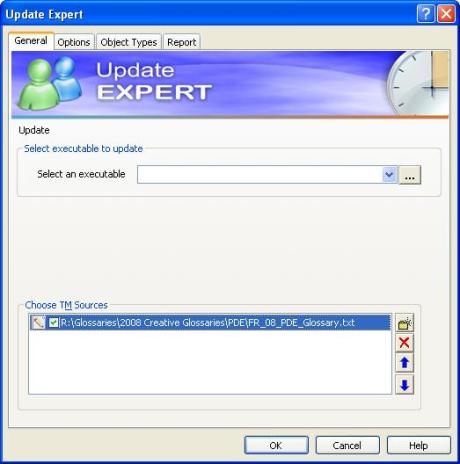

One by one, SDL continue to address obstacles to our upgrade decisions. Earlier today, one of their webinars tackled the critical topic of Compatibility in Translation Supply Chain. A recording will be available at
